Site Reliability Engineering - How Google Runs Production Systems

Ch10: Practical Alerting from Time-Series Data

Borgmon clusters can stream data to each other: an upper-tier Borgmon filters the data it receives from lower tiers and aggregates over it. The aggregation hierarchy therefore provides metrics at different granularities (DC -> campus -> global).

Ch19: Load Balancing at the Frontend

Traffic types differ: search requests care about latency, video requests care about throughput.

- DNS layer LB

  - Clients don't know the closest IP -> anycast -> a map of all networks' physical locations (but how to keep it updated?)

  - Intermediate DNS resolvers cache replies, so the authoritative server can't tell how many users one reply will affect, and a low TTL is needed for changes to propagate

  - DNS needs to know each DC's capacity

- Virtual IP layer LB: LVS; packet encapsulation with GRE (the original IP packet is carried inside another IP packet)

ch20: Load Balancing in the Datacenter

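- Active-request limit: the client marks a backend as bad once its number of active requests to that backend reaches a limit. But a client with many long-running queries hits the limit quickly, even when the backend still has spare capacity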

- Backend has states: healthy, unresponsive, lame duck (stop sending requests to me)

- Subsetting: one client could only talk with limited backends to prevent "backend killer" client

- Weighted Round Robin on backend-provided load information
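
The subsetting bullet above can be made concrete with a small sketch in the spirit of the deterministic subsetting the chapter describes. The function name, the integer `client_id`, and the assumption that `len(backends)` is a multiple of `subset_size` are simplifications for illustration, not a production algorithm.

```python
import random


def subset(backends, client_id, subset_size):
    """Return the fixed subset of backends that one client connects to."""
    subset_count = len(backends) // subset_size
    # Clients are grouped into "rounds" of subset_count clients each.
    # All clients in a round shuffle the backend list with the same seed.
    round_id = client_id // subset_count
    shuffled = list(backends)
    random.Random(round_id).shuffle(shuffled)
    # Within a round, each client takes a different, non-overlapping slice.
    subset_id = client_id % subset_count
    start = subset_id * subset_size
    return shuffled[start:start + subset_size]


if __name__ == "__main__":
    backends = [f"backend-{i}" for i in range(12)]
    for client_id in range(4):
        print(client_id, subset(backends, client_id, 3))
```

Because every round of clients covers each backend exactly once, connections spread evenly across backends while each client still talks to only `subset_size` of them.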

ch21: Handling Overload

- Client-side adaptive throttling: P_reject = max(0, (requests - K * accepts) / (requests + 1))

- Request is tagged with priority

- When to retry

  - per-request retry budget: up to 3 attempts

  - per-client retry budget: retries are capped at 10% of the client's requests, so load grows to about 110% (avoids 3X load)

  - the backend returns "overloaded; don't retry" if its histogram of retry attempts is heavy

- Put a batch proxy if clients have too many connections on backends
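
A minimal sketch of the client-side pieces above: the rejection probability for adaptive throttling and the two retry budgets. The class name `RetryBudget`, the default `k=2.0`, and the use of lifetime counters instead of a sliding time window are assumptions made to keep the example short.

```python
def rejection_probability(requests, accepts, k=2.0):
    """Probability that the client rejects a new request locally.

    requests: requests the application attempted in the recent window
    accepts:  requests the backend accepted in the same window
    k:        multiplier (assumed default); lower values throttle harder
    """
    return max(0.0, (requests - k * accepts) / (requests + 1))


class RetryBudget:
    """Hypothetical helper combining per-request and per-client budgets."""

    def __init__(self, max_attempts=3, max_retry_ratio=0.10):
        self.max_attempts = max_attempts        # per-request budget
        self.max_retry_ratio = max_retry_ratio  # per-client budget
        self.requests = 0
        self.retries = 0

    def record_request(self):
        """Count a first attempt made by this client."""
        self.requests += 1

    def may_retry(self, attempts_so_far):
        """Return True (and spend budget) if another attempt is allowed."""
        if attempts_so_far >= self.max_attempts:
            return False
        if self.requests == 0:
            return False
        if self.retries / self.requests >= self.max_retry_ratio:
            return False
        self.retries += 1
        return True
```

With both budgets in place, a client facing an overloaded backend adds at most about 10% extra traffic instead of tripling it.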

ch23: Managing Critical State: Distributed Consensus for Reliability

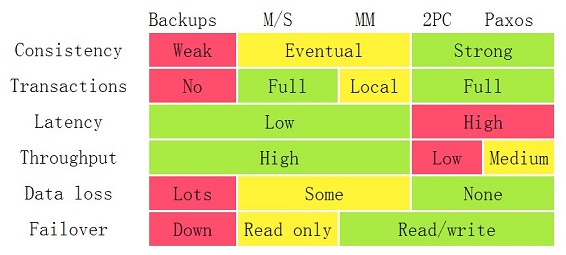

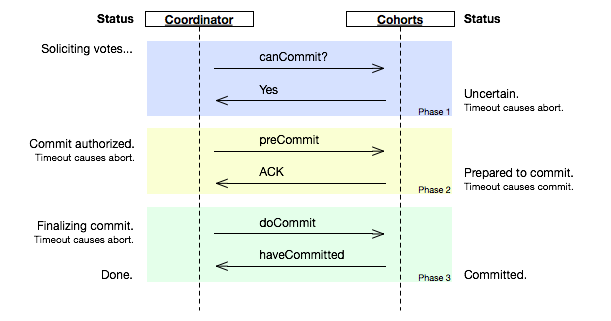

Whenever you see leader election, critical shared state, or distributed locking, use a distributed consensus system.

Different algorithms: crash-fail or crash-recover? Byzantine or non-Byzantine failures?

The fundamental building block is the replicated state machine (RSM): a system that executes the same set of operations, in the same order, on several processes.

The consensus algorithm deals with agreement on the sequence of operations, and the RSM executes the operations in that order.
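
To illustrate the split between agreeing on the order and executing it, here is a toy sketch. The `log` list stands in for whatever sequence the consensus algorithm has agreed on (no consensus protocol is implemented here), and `KeyValueReplica` is a made-up name for the state machine.

```python
class KeyValueReplica:
    """A deterministic state machine: same log in, same state out."""

    def __init__(self):
        self.state = {}
        self.applied = 0  # index of the next log entry to apply

    def apply_log(self, log):
        """Apply every entry not yet applied, strictly in log order."""
        for op, key, value in log[self.applied:]:
            if op == "set":
                self.state[key] = value
            elif op == "delete":
                self.state.pop(key, None)
            self.applied += 1


# The agreed-upon sequence of operations (assumed output of consensus).
log = [
    ("set", "leader", "replica-a"),
    ("set", "epoch", 1),
    ("delete", "leader", None),
    ("set", "leader", "replica-b"),
    ("set", "epoch", 2),
]

replicas = [KeyValueReplica() for _ in range(3)]
replicas[0].apply_log(log[:2])  # one replica lags behind for a while
for replica in replicas:
    replica.apply_log(log)      # everyone catches up from its own position
```

Replicas may apply entries at different times, but because they never reorder or skip entries they all end in the same state.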

What can be built on top of an RSM?

- Replicated Datastores and Configuration Stores: RSM provides consistency semantics. Other (nondistributed-consensus-based) systems rely on timestamps (refer Spanner)

- Highly Available Processing: Leader election of GFS to ensure only one leader for coordinating workers

- A barrier: blocking a group of processes from proceeding until some condition is met -> MapReduce's phases and distributed locking

- Atomic broadcast: for queueing, ensure messages are received reliably and in the same order

Performance

- Network RTT: use regional proxies to hold persistent TCP/IP connections, and it could also serve for encapsulating sharding and load balancing strategies, as well as discovery of cluster members and leaders

- Fast Paxos: each client sends Propose directly to each member

- Stable Leaders: but leader will be a bottleneck

- Batching jobs

- Disk: consider batching writes, or combining the transaction logs into a single log

ch24: Distributed Periodic Scheduling with Cron

- Decouple processes from machines: sending RPC requests instead of launching jobs

- Tracking the state of cron jobs:

  - where

    - Store data externally, e.g. in GFS or HDFS

    - Store internally as part of the cron service (3 replicas); store snapshots locally, and do not store logs on GFS (too slow)

  - what

    - when it is launched

    - when it has finished

- Use Paxos:

  - A single leader launches cron jobs (or does so through another DC scheduler), and only launches a job after a Paxos quorum is reached (synchronously). Is it slow?

  - Followers need to update each job's finish time in case the leader dies

- Resolving partial failures:

  - all external operations must be idempotent, or their state must be stored externally and unambiguously

  - must record the scheduled launch time to prevent double scheduling. Trade-off between risking a double launch and skipping a launch
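
The double-scheduling point can be sketched as follows. The shared `set` is only a stand-in for state replicated through Paxos, and `CronLeader` and `maybe_launch` are hypothetical names. This version records the launch before performing it, which favors skipping a launch over launching twice; reversing the two steps makes the opposite trade.

```python
class CronLeader:
    """Toy leader that launches each (job, scheduled time) at most once."""

    def __init__(self, launched, launcher):
        self.launched = launched  # stand-in for Paxos-replicated state
        self.launcher = launcher  # callable that actually starts the job

    def maybe_launch(self, job_name, scheduled_time):
        """Launch the job unless this scheduled run is already recorded."""
        key = (job_name, scheduled_time)
        if key in self.launched:
            return False
        # Record first (stands in for a synchronous quorum write) ...
        self.launched.add(key)
        # ... then launch. A crash between the two steps skips this run.
        self.launcher(job_name, scheduled_time)
        return True


if __name__ == "__main__":
    shared_state = set()
    started = []
    old_leader = CronLeader(shared_state, lambda j, t: started.append((j, t)))
    old_leader.maybe_launch("backup", "2024-01-01T00:00")
    # After failover, the new leader sees the record and does not relaunch.
    new_leader = CronLeader(shared_state, lambda j, t: started.append((j, t)))
    print(new_leader.maybe_launch("backup", "2024-01-01T00:00"), started)
```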

ch25: Data Processing Pipelines

- Task Master (model): holds all job states (pointers) in RAM; the actual input/output data is stored in a common filesystem such as HDFS

- Workers(view): stateless

- Controller: auxiliary system activities: runtime scaling, snapshotting, workcycle state control, rolling back pipeline state

- Worker output through configuration tasks creates barriers on which to predicate work

- All work committed requires a currently valid lease held by the worker

- Output files are uniquely named by the workers

- The client and server validate the Task Master itself by checking a server token on every operation

- Task Master stores journals in Spanner, using it as a globally available, globally consistent, but low-throughput filesystem.

- Use Chubby to elect writer and persist result in Spanner

- Globally distributed Workflows employ 2+ local Workflows running in distinct clusters

- Each task has a "heartbeat" peer; when the heartbeat times out, the peer takes over and resumes the task
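
The lease and unique-output-name rules above can be sketched like this. The `TaskMaster` class here is a toy stand-in with invented method names and an injectable clock, assumed only for illustration; it is not the real Workflow API.

```python
import time


class TaskMaster:
    """Toy lease tracker: only a valid lease holder may commit work."""

    def __init__(self, lease_seconds, clock=time.monotonic):
        self.lease_seconds = lease_seconds
        self.clock = clock
        self.leases = {}     # task_id -> (worker_id, expiry time)
        self.committed = {}  # task_id -> output file name

    def acquire(self, task_id, worker_id):
        """Grant a lease unless the task is done or validly leased elsewhere."""
        if task_id in self.committed:
            return False
        lease = self.leases.get(task_id)
        if lease and lease[0] != worker_id and self.clock() < lease[1]:
            return False
        self.leases[task_id] = (worker_id, self.clock() + self.lease_seconds)
        return True

    def commit(self, task_id, worker_id):
        """Accept output only from the worker holding an unexpired lease."""
        lease = self.leases.get(task_id)
        if lease is None or lease[0] != worker_id or self.clock() >= lease[1]:
            return False
        # The output name includes the worker id, so workers never collide.
        self.committed[task_id] = f"{task_id}-{worker_id}.out"
        del self.leases[task_id]
        return True
```

A worker that stalls past its lease can still finish and write its own uniquely named file, but its commit is refused, so a slow worker cannot overwrite the result of the worker that took over.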